Xbox 360 HDMI Display Settings Guide

Note: This guide has been publicly available in doc form in various places around the web since 2010. You can download a copy from my Academia research page here.

I. Display Discovery

II. HDTV Settings

III. HDMI Color Space

IV. Reference Levels

V. Sources

Introduction

Following the release of the HDMI-equipped Xbox 360 in 2007, Microsoft issued a system update which added a set of new options under System Settings specifically for the HDMI interface. With its unified transfer of digital audio and video over a single cable, HDMI has quickly become the connection of choice in most homes. The HDMI spec itself has gone through a number of revisions since its inauguration in December of 2002, with terminology, feature support and cable designations all shifting ground. Getting everyone in the consumer electronics industry—from display manufacturers to set-top box producers to content creators—to speak the same language has proved a considerable challenge. HDMI-related settings buried in home theater equipment menus are often inconsistently labeled and accompanied by particularly vague descriptions. Thus it would appear the confusion does not begin and end with the consumer, but extends to manufacturers as well.

This guide is an attempt to demystify settings specific to the HDMI interface commonly found on HDMI-compatible devices. While this write-up will make clear the preferred settings for the Xbox 360 console, the information here is equally applicable to other A/V equipment. As will be apparent, there are no universally preferred settings due to the intrinsically complex nature of video interfacing. However, with minimal effort you can ensure that your Xbox 360 and other devices are configured correctly for the best possible image and audio quality.

Note 1: The HDMI out port is located directly under the AV cable slot, to the right of the serial number and manufacturing date label. You must have your Xbox 360 connected via HDMI to a display device to make use of the settings described here. If connected via different cables, many of these settings will be grayed out.

Note 2: The Xbox 360 console supports up to HDMI Spec 1.2. As the specs are backward compatible, there should be no interoperability issues when using AV equipment with different HDMI version numbers. Do note that feature availability is governed by the principle of lowest common denominator support: in order to take advantage of features found in any given spec, all devices in the chain must support that spec.

Note 3: A brief note about HDMI cables. Much internet bandwidth has been spent on protecting consumers from falling prey to Monster and similarly overpriced cable dealers. With digital signals, either the 1s and 0s are transmitted in their entirety, or they are not and the errors are immediately obvious; there is no degraded middle ground. Marketing duplicity notwithstanding, there is zero difference in audiovisual quality between the cheapest HDMI cable and the exorbitantly priced “gold-plated” Monster-branded bunco. Simply buy a High Speed cable from Monoprice and save. (Note that runs over 50 feet may require an active cable or repeating device to transmit the signal, as there are tolerances built around distance for the HDMI spec. But again, the cable will either work perfectly, or it won’t and you’ll be able to tell right away; if it works, buying any other cable won’t improve the signal in any way.)

Lastly, the labeling of HDMI cables has gone through a number of iterations over the years. On the heels of Spec 1.3, the HDMI consortium sought to streamline the designations to minimize confusion. The previously used ‘Version’ and ‘Category’ monikers have now been replaced by two simple groupings: Standard and High Speed. A High Speed with Ethernet HDMI lead will prove hiccup-free for 99% of all A/V scenarios.

Getting Started

On the Dashboard, navigate to Console Settings –> Display, and you will see a list of output options. We will be discussing the following four items, in this order: Display Discovery, HDTV Settings, HDMI Color Space and Reference Levels.

Display Discovery

The 360’s Display Discovery feature makes use of EDID to automatically configure the output resolution for your Xbox 360. When this is enabled, the console will query your HDTV for its native resolution and adjust the console’s output resolution to match. This feature only applies to output resolution and does not impact color space or reference level settings. DD is usually harmless, except in the rare situation that your HDTV’s EDID is read improperly or returns invalid data. Either way, feel free to disable this setting and manually select the output resolution in HDTV Settings.
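For readers curious what that query involves, here is a minimal Python sketch that pulls a display's preferred (normally native) resolution out of the 128-byte EDID base block. It assumes the VESA E-EDID 1.3/1.4 layout, in which the first 18-byte detailed timing descriptor at byte offset 54 holds the preferred mode. The function name is hypothetical, and this is not a description of the Xbox 360's actual firmware; the header and checksum checks simply illustrate how a source can detect the "invalid data" case mentioned above.

```python
EDID_HEADER = bytes([0x00, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0x00])


def preferred_resolution(edid: bytes) -> tuple[int, int]:
    """Return (width, height) from the first detailed timing descriptor.

    Hypothetical helper; assumes an E-EDID 1.3/1.4 base block.
    """
    if len(edid) < 128:
        raise ValueError("EDID base block must be 128 bytes")
    block = edid[:128]
    # Every base block starts with the same fixed 8-byte header.
    if block[:8] != EDID_HEADER:
        raise ValueError("bad EDID header")
    # All 128 bytes must sum to 0 modulo 256, otherwise the data is corrupt.
    if sum(block) % 256 != 0:
        raise ValueError("EDID checksum mismatch")
    dtd = block[54:72]  # first 18-byte descriptor = preferred timing
    if dtd[0] == 0 and dtd[1] == 0:
        # A zero pixel clock marks a display descriptor, not a timing.
        raise ValueError("no detailed timing in first descriptor")
    # Active sizes are 12-bit: low 8 bits in one byte, high 4 bits in the
    # upper nibble of a byte shared with the blanking size.
    width = dtd[2] | ((dtd[4] & 0xF0) << 4)
    height = dtd[5] | ((dtd[7] & 0xF0) << 4)
    return width, height
```

In this sketch, a display whose EDID reports 1920×1080 would lead a Display Discovery-style feature to pick 1080p, while a corrupt block raises an error instead; that is the point where choosing the resolution manually in HDTV Settings takes over.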

HDTV Settings

This screen allows you to select the output resolution of the Xbox 360. Generally, the output resolution of your A/V devices should match the native resolution of your display. For example, if your HDTV is 1080p (marketed as “Full HD” by many manufacturers), your equipment should output 1080p. This will allow you to enable “Dot by Dot” features on your display, which preserve 1:1 pixel mapping of the input signal with zero overscan. However, several caveats apply:

- Scaling performance varies across A/V equipment. The rule of thumb is to allow the device with the superior upconversion software to handle the scaling. The graphics scaler in the X360 is top-drawer and optimized for graphics with minimal scaling artifacts. So if your display is native 1080p, for example, this would mean setting your Xbox 360 to 1080p, even if the game in question does not run natively at 1080p (more on this below). For video content, however, a high-end HDTV, AVR or pre-pro will generally have better upconversion than the Xbox 360. So for video it’s optimal to match the X360 output resolution to that of your source. For example, if the source is a DVD (480p), set the X360 to 480p and outsource the upconversion to the downstream device. If you have an intermediate device (e.g., AVR, pre-pro) between your X360 and your display that offers high-quality upconversion, you would again set the X360 output resolution to match the source resolution for video content, then set the intermediate device’s output resolution to match your display’s native resolution. This allows the superior device (the intermediate) to handle all image upconversion. In short, for games, it is recommended to allow the X360 to scale to the native resolution of your display, and to transfer the responsibility of video scaling downstream.

- Ideally, all source content would match the native resolution of your fixed pixel display, allowing you to avoid the lossy process of image scaling altogether. For example, sending sub-1080p content to your 1080p display will require the signal to be upscaled to 1080p at some point in the imaging chain. However, content resolutions can vary widely, especially for video games. Most Xbox 360 titles are not native 1080p. Many are below 720p, or somewhere in between 720p and 1080p. (When you look on the back of the game box, you will see: ‘HDTV 720p/1080i/1080p’. These are simply supported resolutions and do not refer to the resolution in which the game is internally rendered. Microsoft mandates that all games released on their platform are compatible with the above standard ATSC resolutions, but the GPU may draw at any number of non-standard resolutions before being scaled to the output format.) For games running at non-standard resolutions, configuring your Xbox 360 to send 720p to your 1080p display will cause two scaling steps to occur: 1) The X360 will first scale the source to 720p and 2) Your display will then upscale from 720p to 1080p. This dual scaling can introduce unwanted artifacts in the image as well as increase input lag, both of which are detriments to gaming. As above, this can be avoided by aligning the X360’s output resolution with that of your display.

- Any content that is truly native 1080p (e.g., a handful of Xbox 360 titles, some Xbox One and PS4 titles, Blu-ray movies) should be output in 1080p to a native 1080p display, eliminating any scaling of the signal.

- Just because you set your Xbox 360 to 1080p and you see a signal on your HDTV does not mean your HDTV is native 1080p. Some “720p” displays, for example, accept 1080p but then downscale to their native resolution (which is generally 1366×768; see below). Make sure you know the resolution of your HDTV.

- While many HDTVs are marketed as “720p”, no LCD TV or computer monitor or plasma display sold in the States actually has a native resolution of 720p (1280×720). Due to manufacturing considerations, any LCD TV or monitor labeled “720p” actually has a native resolution of 1366×768 (WXGA), while the few lingering plasma displays marketed as “720p” actually have a native resolution of 1024×768 (XGA). Due to the tricky, multi-step resizing required, and the fact that 1:1 pixel mapping is impossible as there is no popular content encoded in 768p, it is recommended to avoid 768p sets in favor of 1080p sets. If you do have a 768p monitor or television, the Xbox 360 offers 1360×768 as an output format over HDMI. While strictly speaking this isn’t an exact match to the pixel array of your display, the difference is negligible (there will be an imperceptibly thin border of unused pixels along the side). The X360 also offers a number of other resolution sizes for a variety of monitors, including WVGA (848×480), XGA (1024×768), WXGA (1280×768), SXGA (1280×1024), WXGA+ or WSXGA (1440×900) and WSXGA+ (1680×1050). As above, you should choose the resolution closest to your display, regardless of the game you’re playing.
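The dual-scaling argument in these caveats can be expressed as a toy model: count every hop at which the picture changes size. This is a hypothetical Python illustration, not a measurement of any real scaler, and the 1152×640 render target is an assumed example of a sub-720p game.

```python
Resolution = tuple[int, int]


def scaling_passes(render: Resolution, console_out: Resolution,
                   panel: Resolution) -> int:
    """Count resampling steps between a game's render target and the panel.

    Hypothetical model: each hop whose input and output sizes differ is
    one lossy scaling pass (console scaler first, then the display's).
    """
    passes = 0
    if render != console_out:
        passes += 1  # Xbox 360 scaler
    if console_out != panel:
        passes += 1  # display's own scaler
    return passes


sub_hd = (1152, 640)   # assumed sub-720p render target
panel = (1920, 1080)   # native 1080p display
print(scaling_passes(sub_hd, (1280, 720), panel))   # 2: console, then TV
print(scaling_passes(sub_hd, (1920, 1080), panel))  # 1: console only
print(scaling_passes(panel, (1920, 1080), panel))   # 0: native 1080p
```

The model says nothing about scaler quality; it only shows why matching the console output to the panel removes one resampling pass for sub-native games and removes every pass for truly native 1080p content.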

Note: There’s one fundamental difference between console and PC gaming as regards resolution. While PC games are resolution-independent, disc-based console games are optimized for the fixed hardware of the console platform. So while PC games can be rendered up to whatever resolution your graphics card can handle, console games are programmed to run at a native resolution, which is then upscaled in hardware (if necessary) to the chosen output resolution of the Xbox 360.

HDMI Color Space

When HDMI output was first introduced with the Elite console in 2007, only the Reference Levels setting was available and the Xbox 360 only output RGB. A later system update added the full suite of color space options:

- Auto

- Source

- RGB

- YCbCr601

- YCbCr709

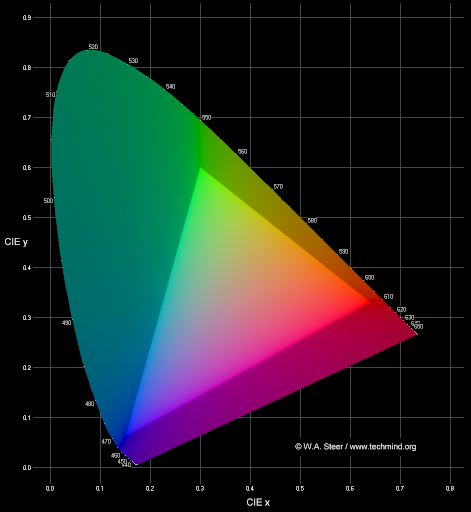

A color space is simply a mathematical representation of color based on a particular color model. Color spaces designed for the visual display industry are all based on the additive RGB color model, which is itself derived from the trichromatic visual system encoded in human biology. Since we want our reproduced images to be as lifelike as possible, it only makes sense that our imaging systems would be modeled after our tristimulus architecture.

It all started in the late 1920s with experiments by two vision scientists, William David Wright and John Guild, who devised methods for specifying color sensation in numerical terms. Building on their data, the International Commission on Illumination (CIE) defined the first standardized color space by plotting visible wavelengths of light in a three-dimensional figure. That figure, intended to encompass the full gamut of human vision, is the 1931 CIE XYZ color space. We’ve been refining and expanding upon this opening effort ever since, as we’ve learned more about the eye’s sensitivity to different wavelengths and as our technological capabilities have improved.

Our drive for perceptual uniformity has produced dozens of different color spaces and several packaging methods for optimizing color interpretation and reproduction across the imaging chain. Some modern examples of color spaces at the consumer and professional level include sRGB, scRGB, xvYCC, Adobe RGB and Adobe Wide Gamut RGB. Regardless of which color space is used, the colorimetric data must ultimately be converted to RGB so your HDTV or monitor can paint the image on-screen. Again, this makes sense because a display’s pixels themselves are made up of red, green and blue components, the universal “color language” of humans and, hence, of visual displays.

Chroma Subsampling

Due to storage and bandwidth limitations in the analog era, the broadcast industry adopted compression techniques for reducing the size of the video signal. This was accomplished by applying a kind of triage to perceptual relevance. Because the human visual system is much more sensitive to variations in brightness than in color, a video system can be bandwidth-optimized by devoting more overhead to the luma (brightness) component (denoted Y’) than to the color difference components, Cb and Cr (collectively, chroma). Separating luma from chroma in this way is what gives you YCbCr (often abbreviated to YCC), and it is what makes chroma subsampling possible: the chroma components can be stored at a lower resolution than luma. As an alternative to sending uncompressed RGB, YCbCr is a common format for encoding data within a particular color space, and the reduced chroma resolution results in negligible visual difference.
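The bandwidth savings from chroma subsampling follow directly from the J:a:b notation. The Python sketch below computes the average number of samples per pixel for a scheme and shows one simple way to build a 4:2:0 chroma plane by averaging 2×2 blocks. The box filter is an assumption chosen for clarity; real encoders use other filters and chroma sample positions.

```python
def samples_per_pixel(scheme: str) -> float:
    """Average samples per pixel for a J:a:b chroma subsampling scheme.

    J:a:b describes a reference block J pixels wide and 2 rows tall:
    J luma samples per row, 'a' chroma samples (for each of Cb and Cr)
    in the first row and 'b' in the second row.
    """
    j, a, b = (int(part) for part in scheme.split(":"))
    luma = 2 * j          # full-resolution luma in both rows
    chroma = 2 * (a + b)  # Cb and Cr each carry a + b samples
    return (luma + chroma) / (2 * j)


def subsample_420(plane: list[list[float]]) -> list[list[float]]:
    """Halve a chroma plane in both directions by averaging 2x2 blocks."""
    h, w = len(plane), len(plane[0])
    if h % 2 or w % 2:
        raise ValueError("plane dimensions must be even")
    return [
        [(plane[y][x] + plane[y][x + 1]
          + plane[y + 1][x] + plane[y + 1][x + 1]) / 4
         for x in range(0, w, 2)]
        for y in range(0, h, 2)
    ]


for scheme in ("4:4:4", "4:2:2", "4:2:0"):
    bits = samples_per_pixel(scheme) * 8
    print(f"{scheme}: {bits:g} bits per pixel at 8 bits per sample")
```

At 8 bits per sample the loop prints 24, 16 and 12 bits per pixel, so 4:2:0 carries half the data of full-resolution 4:4:4 before any other compression is applied.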

So practical was YCbCr that the broadcast industry embraced it and never looked back. The ITU-R adopted it as the primary encoding scheme in its Rec. 601 and, later in 1990, Rec. 709 standards that govern electronic broadcasting. While modern digital distribution systems are not as capacity-conscious as legacy analog systems, there is still a need for video and color compression in order to allow dense 1080p video to be stored on a Blu-ray Disc, for example, or HD streams to be squeezed through crowded networks. So although the bandwidth of an HDMI cable is more than adequate for carrying the uncompressed (4:4:4) RGB color format, there is still the twin bottleneck of optical disc media and internet infrastructure to contend with. Today, all cable, satellite and OTA (over-the-air) video streams, DVD and Blu-ray titles, along with Netflix and other streaming services, store color information using this YCbCr format.
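To put numbers on that bottleneck, the sketch below computes the raw (pre-codec) data rate of 1080p24 film content at 8 bits per sample. It assumes a 40 Mbit/s peak video rate for Blu-ray and ignores blanking intervals, audio and link-coding overhead, so treat the figures as ballpark arithmetic rather than a spec calculation.

```python
def raw_video_bitrate(width: int, height: int, fps: float,
                      bits_per_pixel: float) -> float:
    """Uncompressed active-picture data rate in bits per second.

    Ignores blanking intervals, audio and link-coding overhead.
    """
    return width * height * fps * bits_per_pixel


BLU_RAY_MAX_VIDEO_BPS = 40e6  # assumed: Blu-ray peak video bit rate

rgb = raw_video_bitrate(1920, 1080, 24, 24)     # 1080p24, 8-bit RGB 4:4:4
ycc420 = raw_video_bitrate(1920, 1080, 24, 12)  # 1080p24, 8-bit YCbCr 4:2:0
print(f"RGB 4:4:4:   {rgb / 1e6:.0f} Mbit/s")
print(f"YCbCr 4:2:0: {ycc420 / 1e6:.0f} Mbit/s")
print(f"codec must still shrink 4:2:0 by ~{ycc420 / BLU_RAY_MAX_VIDEO_BPS:.0f}x")
```

The raw 4:4:4 stream works out to roughly 1.2 Gbit/s and 4:2:0 to roughly 0.6 Gbit/s, so even after subsampling, the disc's video codec still has to shrink the picture by around fifteen times.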

Rec. 601 and Rec. 709

The Rec. 601 and Rec. 709 standards specify, among other picture parameters, formulas for converting YCbCr to and from RGB. As the maths are different, the Xbox 360 provides both options and you will want to use the appropriate format for the content you are viewing.

For standard-definition video, such as DVDs and 480i/p content streams, you should use YCbCr601, which outputs the official Rec. 601 color space standard. For all HD video, including 720p/1080i/p video streams, you should use YCbCr709, which outputs the color coordinates for Rec. 709. The color decoders in most HDTVs can handle both matrices, so they will detect the incoming color space flags and perform the proper conversion to RGB without a hitch.
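Because the two standards use different luma coefficients (Kr = 0.299, Kb = 0.114 for Rec. 601; Kr = 0.2126, Kb = 0.0722 for Rec. 709), decoding with the wrong matrix shifts colors even though the code values are identical. The Python sketch below assumes 8-bit limited-range input (luma 16-235, chroma 16-240) and full-range 8-bit RGB output; the function name is illustrative only.

```python
# Luma coefficients (Kr, Kb) from the two ITU-R recommendations.
MATRICES = {
    "601": (0.299, 0.114),
    "709": (0.2126, 0.0722),
}


def ycbcr_to_rgb(y: int, cb: int, cr: int, matrix: str) -> tuple[int, int, int]:
    """Decode 8-bit limited-range Y'CbCr to 8-bit full-range R'G'B'."""
    kr, kb = MATRICES[matrix]
    # Normalize: luma 16-235 -> 0..1, chroma 16-240 -> -0.5..0.5
    yn = (y - 16) / 219
    pb = (cb - 128) / 224
    pr = (cr - 128) / 224
    r = yn + 2 * (1 - kr) * pr
    b = yn + 2 * (1 - kb) * pb
    g = (yn - kr * r - kb * b) / (1 - kr - kb)

    def to_8bit(v: float) -> int:
        return max(0, min(255, round(v * 255)))

    return to_8bit(r), to_8bit(g), to_8bit(b)


sample = (81, 90, 240)  # saturated red as encoded with the 601 matrix
print("601:", ycbcr_to_rgb(*sample, "601"))
print("709:", ycbcr_to_rgb(*sample, "709"))
```

With the sample values, the 601 decode comes out as a clean red, while the 709 decode picks up a visible green component. Neutral grays (Cb = Cr = 128) decode identically under both matrices, which is why a matrix mismatch shows up in colors rather than in grayscale.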

RGB

While video-based content speaks in YCbCr language, games are an entirely different animal. Naturally, different standards and recommendations apply. As on PC, console games are rendered internally by the GPU in native 8-bit RGB, and the X360 dashboard also runs in native RGB space. Thus, to avoid any unnecessary processing you should always have your Xbox 360 set to RGB for games. Otherwise, the native RGB format is transcoded and resampled to YCbCr, which will then be decoded back to RGB once it reaches the display device. More conversions = increased opportunity for errors and input lag. As RGB is the universal color format of visual displays, all HDMI-compliant sets accept it.
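To see why extra conversions are not free, the Python sketch below sends every 8-bit gray level through an RGB to YCbCr to RGB round trip, storing 8-bit integers at each hop. It assumes the Rec. 709 matrix and limited-range YCbCr, and it leaves out chroma resampling, which would only add further error; the helper names are hypothetical.

```python
KR, KB = 0.2126, 0.0722  # Rec. 709 luma coefficients (assumed matrix)


def rgb_to_ycbcr(r: int, g: int, b: int) -> tuple[int, int, int]:
    """8-bit full-range R'G'B' -> 8-bit limited-range Y'CbCr."""
    rn, gn, bn = r / 255, g / 255, b / 255
    yn = KR * rn + (1 - KR - KB) * gn + KB * bn
    pb = (bn - yn) / (2 * (1 - KB))
    pr = (rn - yn) / (2 * (1 - KR))
    return round(16 + 219 * yn), round(128 + 224 * pb), round(128 + 224 * pr)


def ycbcr_to_rgb(y: int, cb: int, cr: int) -> tuple[int, ...]:
    """Inverse of rgb_to_ycbcr, clamped to 0-255."""
    yn = (y - 16) / 219
    pb = (cb - 128) / 224
    pr = (cr - 128) / 224
    rn = yn + 2 * (1 - KR) * pr
    bn = yn + 2 * (1 - KB) * pb
    gn = (yn - KR * rn - KB * bn) / (1 - KR - KB)
    return tuple(max(0, min(255, round(v * 255))) for v in (rn, gn, bn))


# Push every gray level through RGB -> YCbCr -> RGB and count changes.
changed = [v for v in range(256)
           if ycbcr_to_rgb(*rgb_to_ycbcr(v, v, v)) != (v, v, v)]
print(f"{len(changed)} of 256 gray levels did not survive the round trip")
```

Because limited-range luma has only 220 codes for 256 gray inputs, at least 36 grays must come back changed whenever the intermediate signal is stored in 8 bits. Sending native-RGB game output straight through as RGB sidesteps this step entirely.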

(There really is no good reason why the X360 even allows for YCbCr output for games. In contrast, the PS3 allows games to run in RGB mode only; YCbCr is reserved exclusively for BD/DVD playback. If set to YCbCr, the PS3 will auto-switch to RGB when the game flag is detected, and when playing back Blu-rays you can switch between YCbCr and RGB. My best guess is Microsoft provided this option because the console at one time did not indicate the RGB quantization range correctly for the receiving device, so converting to YCbCr would force the 16-235 range for a display that expects that range—more on this in the Reference Levels section.)

Auto and Source

These two settings attempt to remove some of the guesswork on behalf of the user. Personally, I prefer to take the automaticity away from the machine and manually force the appropriate setting. HDMI negotiations between source and sink aren’t perfect, and mangled communication can de-optimize your setup. So unless specific options aren’t available, I recommend explicitly setting color space and reference levels.

The Auto setting selects the color space format your display is set to receive according to HDMI protocols. The Enhanced Display Data Channel (E-DDC) embedded in the HDMI chip of the X360 queries and reads the E-EDID data from the HDMI sink device (display), and then negotiates the optimal output, usually behaving like the Source setting below. The HDMI Auto setting found in Blu-ray players and other home theater components follows this process as well.

The Source setting adapts to the incoming color space format detected in the signal. If a DVD flag is detected, the X360 should switch to YCbCr601 output. If an HD video stream is detected, it should switch to YCbCr709. For games and the dashboard interface, the X360 will default to RGB.
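The Source behavior described above amounts to a small decision rule. Here is a hypothetical Python sketch of it; the function names and content labels are illustrative, and the fallback that treats anything at or below 576 lines as standard definition when no colorimetry flag is present is an assumption (a convention many video decoders follow), not Microsoft's actual logic.

```python
from typing import Optional


def video_matrix(height: int, flagged: Optional[str] = None) -> str:
    """Pick a Y'CbCr matrix for video content.

    Hypothetical rule: trust an explicit colorimetry flag if present,
    otherwise fall back on the SD/HD convention (<= 576 lines -> 601).
    """
    if flagged in ("601", "709"):
        return "YCbCr" + flagged
    return "YCbCr601" if height <= 576 else "YCbCr709"


def source_output(content: str, height: int = 0,
                  flagged: Optional[str] = None) -> str:
    """Mimic a Source-style setting: RGB for native-RGB content, else Y'CbCr."""
    if content in ("game", "dashboard"):
        return "RGB"  # rendered natively in RGB, so send it untouched
    return video_matrix(height, flagged)


print(source_output("game"))               # RGB
print(source_output("video", 480))         # YCbCr601
print(source_output("video", 1080))        # YCbCr709
print(source_output("video", 576, "709"))  # YCbCr709: flag overrides height
```

The last case shows why an explicit flag matters: an SD stream mastered to Rec. 709 would be decoded with the wrong matrix if the choice were made on resolution alone.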

Color Space Wrap-Up

To wrap up, some HDMI-friendly electronics, like the Xbox 360, offer both YCbCr and RGB output options over HDMI. These are simply different ways of sending the same signal, the former being compressed and the latter uncompressed. For video-based content, one is not necessarily “better” than the other because no matter which you choose, the same conversion steps are happening; all you are choosing is which device is performing the conversion. As you’ll recall, the pixels of a digital display are red, green and blue, so whichever color format is output from a source device, the display must ultimately have an RGB signal to work with before the pixels in the display can be illuminated. This is the same process no matter what display technology is being used, whether LCD, plasma, DLP or CRT.

The only situation in which this may matter (again, for video only) is if the color decoder in the source or sink device is faulty. Converting between RGB and YCC is simple math and is lossless (assuming the software uses enough bits of precision), but I have seen cases in which one device does not handle the conversion properly. (Using test material will help you identify if your setup has any issues of this variety.) If the conversion is handled properly, there should be no visual difference between the two formats.

The choice becomes important, however, in the case of gaming consoles. Since games are rendered natively in RGB, only the RGB setting makes sense here. Assuming there are no color decoding errors bungling the video chain, the pursuit of accuracy suggests sending a YCC signal for video and RGB for games.

Note 1: While NTSC region DVD video follows the Rec. 601 standard, most PAL region DVDs conform to the Rec. 709 standard.

Note 2: For streaming services like Netflix and Hulu Plus that optimize picture quality according to available bandwidth, it is less clear-cut which color standard will be present in the stream. For these services, it is generally best to use the Xbox 360 options Source or Auto, which adapt to the signal’s color space.

Note 3: All Blu-ray media is encoded in 8-bit YCbCr 4:2:0. (The three-part ratio is the subsampling format.) Some players give you multiple color format options, including YCC 4:2:2, YCC 4:4:4 and RGB (4:4:4). One caveat revealed by video technicians Spears and Munsil regards the native processing mode of the chip in the display device. Some HDTV chips are designed for only one color format and will process all incoming formats by first resampling to their native format. For example, a chip designed exclusively for 4:2:2 processing will convert an RGB (4:4:4) signal back to YCbCr 4:2:2, then upsample to YCbCr 4:4:4 before finally converting to RGB (4:4:4). In these cases, it might actually be disadvantageous to decode in the player. Finally, some Blu-ray players actually convert to RGB (4:4:4) before forwarding the signal through the HDMI output, even if the player provides no RGB output option. Good test material will aid in sorting out these nuances.

Reference Levels

Now we come to Reference Levels, far and away the most important setting, as choosing incorrectly (i.e., sending the wrong range) can muck up the hallowed black level you paid so much for. Reference Level is Microsoft’s terminology for quantization range, which governs the number of discrete steps or values that can be assigned to a subpixel. There are two and only two ranges used for visual displays. The first, 0-255, is formally known as PC or IT quantization range (used in computing, including PC hardware, gaming consoles and computer monitors). The second, 16-235, is formally known as CE or video quantization range (used in televisions and all commercial video).

First, a quick word about terminology. It is unfortunate indeed that equipment manufacturers don’t use consistent labels for these settings. Samsung, Microsoft, Panasonic, LG, etc. all seem to use different names. The simplest solution, it seems to me, is to just write out the range in the menus: 16-235 or 0-255. Alas, numbers can be scary and so most manufacturers use their own nomenclature. Those proprietary designations that map to 16-235 include Low, Limited, Video and Standard. Those that map to 0-255 include High, Full, Expanded, Extended, Enhanced and Normal.
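Since the same two ranges hide behind so many labels, it can help to treat the menu names as a lookup table. The Python sketch below encodes the mapping listed in this paragraph; it is illustrative only, a given manufacturer may use one of these words differently (so check your manual), and Intermediate is deliberately left out because it is not a standard range.

```python
LIMITED = (16, 235)  # CE / video range: (reference black, reference white)
FULL = (0, 255)      # PC / IT range

RANGE_LABELS = {
    **{name: LIMITED for name in ("low", "limited", "video", "standard")},
    **{name: FULL for name in ("high", "full", "expanded", "extended",
                               "enhanced", "normal")},
}


def quantization_range(label: str) -> tuple[int, int]:
    """Translate a menu label into the (black, white) range it selects."""
    try:
        return RANGE_LABELS[label.strip().lower()]
    except KeyError:
        raise ValueError(f"unknown or nonstandard label: {label!r}") from None


print(quantization_range("Limited"))   # (16, 235)
print(quantization_range("Expanded"))  # (0, 255)
```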

You’ll note that I specified two ranges above, while Microsoft offers three. Reference Level Standard corresponds to 16-235, Expanded to 0-255, and Intermediate, well, Intermediate is not a standardized range at all. Microsoft appears to have invented this setting out of thin air. According to histogram testing, Intermediate outputs somewhere in the ballpark of ~8 to ~245, so perhaps it was included as a compromise solution for people who can’t resolve the right negotiation for their setup. At any rate, Intermediate should only be used as a last-resort stopgap for deficiencies existing elsewhere in the imaging chain.[1]

0-255 vs. 16-235

Both PC and CE quantization ranges are based on 8-bit color depth. An 8-bit color depth allows for 256 possible gradations per color channel (2^8), varying from black at the weakest intensity (0) to white at the strongest intensity (255). The only difference between the two ranges is where reference black and white are positioned. With PC range, 0 is reference black and 255 is reference white, for a total of 256 discrete steps. CE range, on the other hand, places reference black at 16 and reference white at 235 along the same 0-255 scale, leaving 220 discrete steps to which values may be assigned.
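Written out, the relationship between the two scales is a straight linear map (before any rounding back to integer code values), with the endpoints and level counts following directly from the figures above:

```latex
V_{\mathrm{CE}} = 16 + \frac{219}{255}\,V_{\mathrm{PC}},
\qquad
V_{\mathrm{PC}} = \frac{255}{219}\,\bigl(V_{\mathrm{CE}} - 16\bigr)

% Endpoints: V_PC = 0   ->  V_CE = 16  (reference black)
%            V_PC = 255 ->  V_CE = 235 (reference white)
% Levels:    255 - 0 + 1 = 256 (PC)  versus  235 - 16 + 1 = 220 (CE)
```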

Why the inconsistency? Ask the video engineers. Due to limitations intrinsic to video broadcasting (from capture to encoding to transmission), the final signal may not always fall neatly into the specified range. For a variety of technical reasons, data intended for grayscale level 2, for example, may slip to level 0 or be clipped altogether by the time it reaches your display. This is much less of a concern in the all-digital distribution pipelines of today, but engineers decided at the time on a 16-235 range, building headroom (236-254) and footroom (1-15) around those upper and lower values so signals slipping outside the nominal range could still be reproduced by the end user if desired.

In the land of video, 16 and 235 are reference levels, not hard limits. Any data below level 16 is referred to as blacker-than-black (BTB), and any data above level 235 is referred to as whiter-than-white (WTW). (Note that these terms only apply when discussing video/CE range.) 0 and 255, on the other hand, are indeed hard limits.

It is important to understand that 0-255 does not result in “deeper blacks” or “brighter whites” compared with 16-235. Both ranges simply possess a different number of tonal gradations between reference black and reference white. As long as the display is set to receive the range the source device is sending, reference black and reference white for both ranges are the same intensity to the end user (i.e., black level 0 in PC mode should appear the same as black level 16 in video mode).

All of this has important implications for the type of content you’re sending to your screen. Anything you watch on cable or satellite, anything broadcast OTA, DVD and Blu-ray movies, and Netflix and other streaming media are all mastered according to the 16-235 CE range. We can now complete the circle we began in the section on color space above. You’ll recall that all of the same media is stored using YCbCr. It turns out that the HDMI spec stipulates CE range (video levels) for the YCbCr format.

Section 6.6 of the HDMI Spec reads:

Thus, YCbCr is limited range (16-235) by design. Because of this, it is unaffected by the Reference Level setting on the Xbox 360. And since YCbCr cannot be output at PC range, the Reference Level setting affects RGB output only. You can test this for yourself. Switch to YCbCr mode and toggle between the three Reference Levels; no effect. It would be less confusing for consumers if the option were simply grayed out when in YCbCr mode. Unfortunately it’s not, resulting in many a gamer straining to pick up differences that aren’t there.

For games, it’s a different story. They don’t use YCbCr. As with video-based material above, we can now complete the circle. Game graphics are rendered in the GPU’s frame buffer at PC range (0-255). (I’m not aware of any GPUs that can even output native 16-235 range.) Thus, everything other than video is rendered natively in 0-255 RGB space, making RGB Expanded the preferred setting for games. Using 0-255 end-to-end can also help reduce banding in-game due to the additional levels of gradation available. If instead you set your X360 to Standard, the GPU still renders at 0-255, and that output must then be requantized to 16-235 on its way out. Configuring the console for 0-255 (Expanded) avoids this unnecessary step.
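The cost of that extra step is easy to count. This Python sketch assumes simple rounding to 8-bit integers (real hardware may dither or round differently), maps every 0-255 code onto the 16-235 scale, and checks how many distinct levels survive.

```python
def full_to_limited(v: int) -> int:
    """Requantize one 0-255 code to the 16-235 scale (8-bit, rounded)."""
    return round(16 + v * 219 / 255)


def limited_to_full(v: int) -> int:
    """Expand a 16-235 code back to 0-255, clipping BTB/WTW values."""
    return max(0, min(255, round((v - 16) * 255 / 219)))


distinct = {full_to_limited(v) for v in range(256)}
print(len(distinct))  # 220 distinct output levels from 256 input codes
```

All 256 input codes collapse onto 220 output codes, so 36 pairs of neighboring shades become indistinguishable, which is one source of the banding mentioned above. Expanding back to 0-255 at the display cannot recover them.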

Source and Sink Agreement

The ultimate objective here is to ensure that source and sink, and everything in between, are in agreement. This will ensure reference black and white output from the source are in sync with the reference tones the display is expecting. Which Reference Level to use becomes an easy answer if the display you are using can only reproduce one or the other. For example, most PC monitors have only one display mode (0-255), so using Expanded here is the obvious choice. As for HDTVs, most top-tier models can switch into both ranges over HDMI. If yours doesn’t have a PC mode or anything with 0-255 in the label, you will be stuck with Standard Reference Level.

Keep in mind that most HDTVs and other home theater equipment are configured for video levels by default. You may need to dig into your menus and toggle it over if you want to use Expanded Reference Level for games. Remember, while full range RGB is the best way to output games from the Xbox 360, it only works if the display expects full range. Make sure they match.

Here’s what to look for. If you send Standard range (16-235) to a display expecting Expanded range (0-255), the image will appear washed out and your contrast ratio will suffer: see Quadrant 3 below. Low-end detail gets the shaft because your 360 is placing black level at 16, while your display is placing black level at 0. Conversely, if you send Expanded range (0-255) to a display expecting Standard range (16-235), you’ll get a mixture of crushed black detail and crushed white detail, not a good combo: see Quadrant 2 below. Your game will look too dark because your 360 is placing black level at 0, while your HDTV is placing black level at 16; thus every gradation between 0 and ~16 is being crushed, and every gradation above 235 is also being crushed. Quadrants 1 and 4 are examples of Expanded-Expanded and Standard-Standard, respectively.
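The four source/display combinations can be modeled in a few lines. This hypothetical Python sketch maps an 8-bit code to a relative output level (0 for reference black, 1 for reference white) given the range the display expects, clipping anything outside it. It works linearly in code values and ignores gamma, so it shows which codes get lifted or crushed, not actual light output.

```python
Range = tuple[int, int]
FULL: Range = (0, 255)       # Expanded
LIMITED: Range = (16, 235)   # Standard


def displayed_intensity(code: int, expected: Range) -> float:
    """Relative level (0 = reference black, 1 = reference white) for an
    8-bit code, given the range the display expects; clips outside it."""
    black, white = expected
    return min(1.0, max(0.0, (code - black) / (white - black)))


def send(source: Range, display: Range) -> tuple[float, float]:
    """Levels the display produces for the source's reference black/white."""
    black, white = source
    return (displayed_intensity(black, display),
            displayed_intensity(white, display))


for name, src, disp in (("Standard -> Expanded TV", LIMITED, FULL),
                        ("Expanded -> Standard TV", FULL, LIMITED)):
    black, white = send(src, disp)
    print(f"{name}: black={black:.3f}, white={white:.3f}")

# Codes a Standard-range display cannot tell apart from black or white.
crushed_blacks = sum(1 for c in range(256)
                     if displayed_intensity(c, LIMITED) == 0.0)
crushed_whites = sum(1 for c in range(256)
                     if displayed_intensity(c, LIMITED) == 1.0)
print(crushed_blacks, crushed_whites)
```

Sending Standard to a display expecting Expanded lifts black to about 6% of the scale and stops white at about 92% (the washed-out case), while sending Expanded to a display expecting Standard collapses codes 0-16 into black and 235-255 into white (the crushed case).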

The image below is used here courtesy of NeoGAF forums user Raist in this post.

Bottom line: Regardless of which range you use, what matters is that all devices in your imaging chain are configured for the same range. Provided they are in agreement, there is very little to recommend one range over the other, as any differences are generally attributable to the capabilities of the display, not the range itself. If your display is capable of reproducing full range (without crushing low-end black or high-end white, and without clipping the RGB channels), then the ideal scenario is to use full range throughout, since this is how games are developed. If your HDTV is incapable of displaying the full 0-255 range to your liking, simply output Standard from the 360 and keep your TV on 16-235 (which is the default setting for most TVs anyway). Mileage may vary on this score. If you’re curious what your display is capable of, you can find a BTB test pattern here and a WTW test pattern here.

Finally, for those serious about calibrating their display, I cannot recommend Spears and Munsil’s immaculate HD Benchmark disc, now in its 2nd edition, highly enough.

Note 1: Many games offer an in-game brightness tool with instructions for how to pass the pattern. This should be the last thing you touch, if at all. Make sure source and sink are in agreement first and your display’s brightness and contrast are calibrated properly. Then bring up the brightness pattern included with the game. If you’re not able to pass the pattern, then and only then should you adjust the in-game brightness control. This should be done on a case-by-case basis. You should never adjust TV controls to pass an in-game brightness pattern because those settings may be wrong for every other game, movie, etc., requiring constant recalibration. Instead, the preferred approach is to tweak the in-game brightness control per game, if necessary, to bring the game into alignment with your display calibration, not the other way around.

Note 2: If you’re using a receiver or other intermediate device, make sure it doesn’t clip 0-255 to 16-235. Some models are known to truncate whatever signal they receive such that they only pass 16-235.

Note 3: While most video mastering engineers strictly adhere to the 16-235 range, a few may intentionally place detail above 235. For example, white detail in clouds or sky, or white clothing may exceed reference white (235). If your display is calibrated to show a bit of WTW, you will see this extra detail. If the display is not calibrated to show it or is unable to show it, then the detail will be clipped. (Put differently, that image area will be the same grayscale level as your highest distinguishable grayscale level.) Calibrating a peak white level for your display is largely personal preference and you may be limited by the adjustment range of your system as well as your viewing environment. The same applies to BTB data. (This note only applies to video-based material and not to games.)

Note 4: In the PC world, many video cards do not remap levels with especially high precision and could introduce banding in the image. If optimal IQ is desired, minimizing the number of levels conversions will yield the best results. For PC games connected to HDTVs, this means making sure all equipment in the chain maintains 0-255 range. For displaying video-based content on a PC, there are a number of drivers out there that can map to video levels.

Note 5: One of the Xbox 360 system updates borked the reference level settings for the dashboard and a number of media apps (games were unaffected). The issue has now been fixed in most if not all video apps, as well as in the dashboard (bar the picture viewer). Games are still flagged correctly.

Sources

- Understanding Color

- Introduction to Colour Science

- Choosing a Color Space

- A Review of RGB Color Spaces

- HDMI Enhanced Black Levels, xvYCC and RGB

- xvYCC and Deep Color

- YCbCr to RGB Considerations

- Color Subsampling, or What is 4:4:4 or 4:2:2?

- What is YUV?

- Understanding Color Processing: 8-bit, 10-bit, 32-bit, and More

- HDMI 1.2 Specification

- HDMI 1.3a Specification

- Post Processing in The Orange Box

- The ARRI Companion to Digital Intermediate

- Laser TV: Ultra-Wide Gamut for a New Extended Color-Space Standard, xvYCC

- Comparison of HDMI 1.0-1.3 and HDMI 1.4 Usages in the Commercial and Residential Markets

- Spears & Munsil official website

- Unofficial Oppo BDP-83 FAQs

[1] On the Xbox One, the Color Space Standard setting is the equivalent of the Xbox 360’s Reference Level Standard setting (16-235), while Color Space PC RGB is the equivalent of Reference Level Expanded (0-255).
