The Science of Why People Reject Science

Accounting for social and ideological factors in science communication can improve the quality of debates and decision-making when it comes to climate change and other hot-button scientific issues.

In his 1748 treatise An Enquiry Concerning Human Understanding, David Hume famously wrote, “A wise man…proportions his belief to the evidence.” This sentiment has become a familiar fixture of our modern lexicon, a basic standard for intellectual honesty and a prescription for belief formation in an ideal world.

Of course it was also Hume who in an earlier volume wrote that reason is “the slave of the passions.” The original context of the passage concerns moral action and the precedence of our goals, motives and desires in relation to reason; specifically, Hume considered reason an ex post facto force in thrall to our moral impulses. But this concept can be duly extended to the psychology of belief formation more generally. Indeed, once we factor in culture, ideology, human psychology, and emotionally laden values, the image of the dispassionate creature responsive to the best evidence and argument gets cut down to size.

The ease with which the reasoning self can be subverted should clue us in that there is more going on behind the scenes than cold-blooded evaluation of facts. That emotion and ideology so often triumph over reason and evidence is a feature of human psychology we should all be mindful of, but its underlying causes should be of special interest to proponents of science and other veterans of fact-based intervention.

In particular, what we know about cognition has great import for the information deficit model, the quixotic but woefully under-supported idea that the more factual information one is exposed to, the more likely one is to change their mind. As it turns out, our neural wiring tends to lead us in the opposite direction: our emotional attachment to our beliefs and values prods us to double down and organize contradictory information in a way that is consistent with whatever beliefs we already hold — the so-called “backfire effect.” As Chris Mooney put it in 2011: “In other words, when we think we’re reasoning, we may instead be rationalizing.”

I think that on some level anyone who has dealt with science denial for an extended period of time knows this intuitively. Adopting the role of serial debunker, whether online or off, can be a thankless and unrewarding undertaking. We figure that one more “hockey stick” graph, one more image of the vanishing Arctic, one more year of record-topping warmth, one more report on ocean acidification or extreme weather will settle the matter. But it rarely does; discredited claims persist, and frustration sets in.

That these efforts so often end in failure demonstrates the sheer poverty of “Just the facts, ma’am” approaches to persuasion. Summarizing a study on this very topic, Marty Kaplan writes: “It turns out that in the public realm, a lack of information isn’t the real problem. The hurdle is how our minds work, no matter how smart we think we are.” While access to more and higher quality information sounds like a fundamental feature of consensus building, it ultimately matters less than how we situate that information within our preexisting social matrix.

This is not to say that fighting denial with facts never works, or that people are incapable of changing their minds on anything of consequence: it can, and we are. For instance, someone who is already well trained in logic, critical thinking, and evidence-based evaluation of claims in other contexts may yield to the facts even when those facts run against their existing beliefs. This does, however, appear to be the exception rather than the rule. For the most part, the rationality we so aspire to succumbs to inherent constraints and limitations in our cognitive makeup.

What’s become increasingly clear is that scientific literacy alone can’t solve the problem. Indeed, the reason clashes with creationists and climate deniers have us banging our collective heads against the wall is not that we lack the facts. It’s that we’re bumping up against deeply entrenched cultural norms and attitudes. Gaining traction in disputes over settled science, it seems, is less about closing the information gap than about translating the science so that the recipient can process the information without feeling like their social identity and worldview are at risk. Science denial thus stems from the felt sense that scientific beliefs are incompatible with the received wisdom of one’s social sphere.

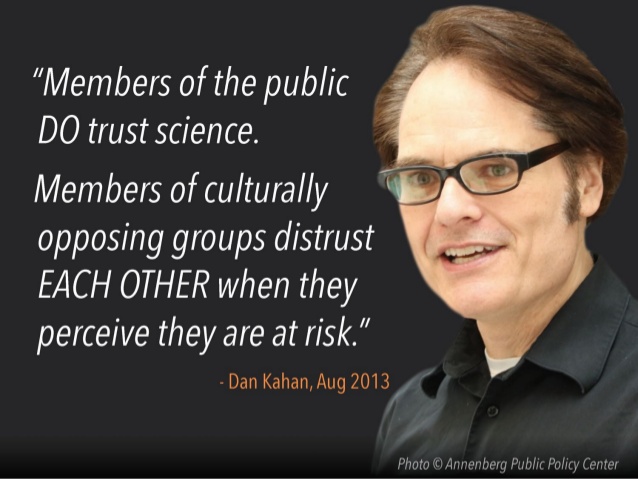

The research supporting this view is now extensive. One of the pioneers in studying the connection between beliefs, evidence and culture (what we might call ‘value-based belief formation’) is a psychology professor at Yale by the name of Dan Kahan. The theory he and his colleagues have proposed as a more robust alternative to the deficit model is identity-protective cognition, otherwise known as cultural cognition.

According to Kahan and others, we perceive and interpret scientific facts largely as symbols for cultural affinities. Especially certain scientific facts — think climate change, evolution, GMOs, stem cell research, vaccination — by dint of being politicized, carry cultural meaning that has nothing to do with the validity of the underlying science. Depending on the strength of one’s political biases, facts may never enter the analysis at all, except to be argued against and swept aside in order to reinforce a fixed ideological position. The more invested we are in a certain cultural identity, and the more politicized the scientific issue, the higher the frequency at which these impulses operate.

Associating with beliefs that are out of step with one’s social group, moreover, bears societal and interpersonal risks (e.g., loss of trust from peers) to members for whom ideology or ‘party’ has become a deep and meaningful part of their self-concept. This consequently prompts the identity-protective mechanism: we selectively credit and discredit evidence in response to those risks. In short, objective evidence is merely subjective fuel for the ideologically beholden. The psychology of group affiliation and competition for social status frequently override rational assessment of scientific knowledge when it comes to climate change, evolution, vaccine safety, and so forth.

TLDR – It’s about cultural identity and values, not facts. To echo Hume, we are not ruled by reason.

I see Kahan & Co.’s research as a powerful commentary on the state of hyper-polarization of American politics and culture, and a cogent, if ineluctably depressing, counter-narrative to the information deficit model practiced by so many science communicators today. This leaves us on somewhat insecure footing. We cannot allow misinformation to spread unchallenged, but neither can we continue to labor under the faulty expectation that dousing our debates with more facts and figures will extinguish the flames of denial. To the extent this body of research provides an accurate picture of the referent under study, we would do well to absorb its insights and apply them in the arena of partisan politics and antiscience contrarianism.

As Chris Mooney wrote in 2012: “A more scientific understanding of persuasion, then, should not be seen as threatening. It’s actually an opportunity to do better — to be more effective and politically successful.” Charging forward with false notions of human psychology dooms our efforts before they get off the ground. That our ideological commitments often prod us to double down and organize dissonant information in ways that cohere with the expectations of our cultural group — and, indeed, that the more informed and literate we are the more susceptible we are to this phenomenon — is invaluable intel in countering the war against facts.

What does this look like in practice? The strategy urged by Kahan and his colleagues is to lead with values, appeal to common concepts and desires, and emphasize shared goals. Scientific minutiae are no one’s friend in these conversations. Instead of a rote rehearsal of the facts, explain why those facts should matter to the listener. Especially avoid framing issues in terms of left vs. right or science vs. denialism. In essence, steer the discussion away from ideological pressure points that are likely to trigger ingroup-outgroup dynamics.

By no means is this an easy or surefire path to success. For one, it requires much more preparation in terms of tailoring your arguments to your audience. But the evidence suggests this approach is more productive than throwing fact after fact at the wall and seeing what sticks.

Some have taken cultural cognition theory to imply that facts are culturally determined, and thus to support a kind of postmodernist understanding of truth. This couldn’t be more mistaken. Identity-protective cognition is an explanatory model for how beliefs, particularly those existing at the intersection of science and politics, are formed. It is both descriptive and prescriptive: it contends that our cultural experiences and personal identity influence the way we approach and interpret facts, and it points us toward new modes of engagement. It should not be construed as a recommendation for how we ought to form reliably accurate views about the world, nor as a call to abandon fact-based decision making or embrace a post-truth era. Scientific facts are still culturally independent descriptions of nature, and the physical laws of the universe don’t change depending on who’s measuring them.

Rather, this research, like all good psychology, brings to light imperfect manifestations of our innate cognitive circuitry. The more we learn about cognition, the more fleeting rationality and reasoned thinking appear to be, and the more vigilant we must be to avoid the common pitfalls so ingrained in our neurochemistry. After all, tribalism and partisanship are more akin to features than bugs in the human operating system; such shortcomings have been with us from the beginning. Only by recognizing these features and adopting communication strategies that account for them can we hope to effectively engage lasting resistance to established science and help guide society out of the pre-Enlightenment era to which we seem to be regressing.

Assembled below is a collection of studies and articles on cultural cognition:

The Cultural Cognition Project at Yale Law School

Cultural Cognition of Scientific Consensus. Kahan et al. 2010

The Tragedy of the Risk-Perception Commons: Culture Conflict, Rationality Conflict, and Climate Change. Kahan et al. 2011

The polarizing impact of science literacy and numeracy on perceived climate change risks. Kahan et al. 2012

Striving for a Climate Change

Fixing the communications failure. Kahan 2010

Most Depressing Brain Finding Ever (on a 2013 study by Kahan et al.)

The Meaning of Scientific “Truth” in the Presidential Election. Kahan 2016

The Hyper-Polarization of America

The Science of Truthiness: Why Conservatives Deny Global Warming

The ugly delusions of the educated conservative

The Science of Why We Don’t Believe Science:

“You can follow the logic to its conclusion: Conservatives are more likely to embrace climate science if it comes to them via a business or religious leader, who can set the issue in the context of different values than those from which environmentalists or scientists often argue. Doing so is, effectively, to signal a détente in what Kahan has called a “culture war of fact.” In other words, paradoxically, you don’t lead with the facts in order to convince. You lead with the values—so as to give the facts a fighting chance.”

This post was featured on HuffPost’s Contributor platform.

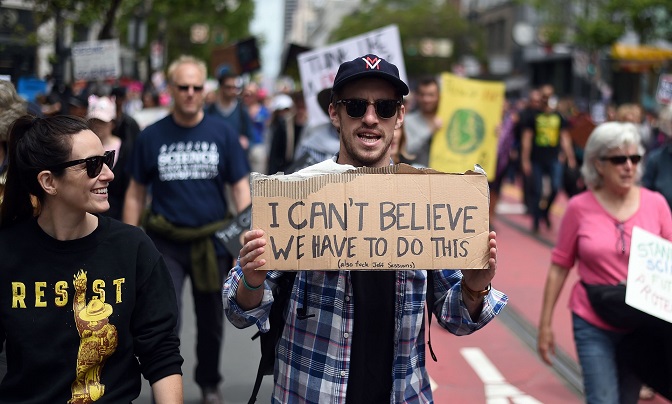

Feature image via The Guardian; photo credit: Josh Edelson/AFP/Getty Images
